Experiential Retail Is The Future

The retail industry is undergoing a major transformation as e-commerce disrupts traditional brick-and-mortar store models and gives rise to new modes of “experiential retail.” By creating a more immersive retail experience, retailers can draw people into their stores and ensure they leave not just with products but also with memories. A key element of experiential retail is the innovative use of technology to provide interactive and immersive experiences.

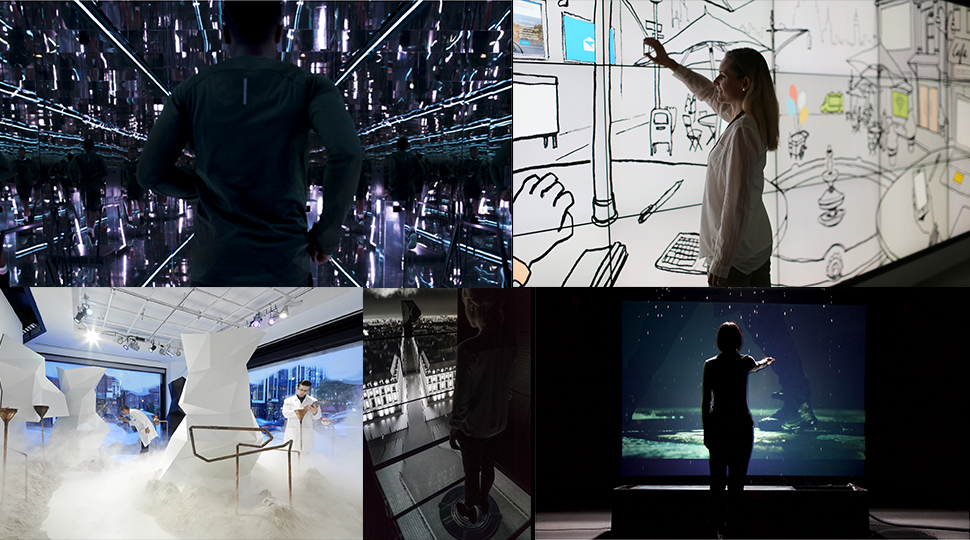

Moodboard - experience marketing